The acquisition of manipulation skills in robotics involves the combination of object recognition, action-perception coupling and physical interaction with the environment. Several learning strategies have been proposed to acquire such skills. As with humans and other animals, the robot learner needs to be exposed to varied situations. It needs to try and refine the skill many times, and/or to observe several successful attempts by others, in order to adapt and generalize the learned skill to new situations. Such a skill is not acquired in a single training cycle, which motivates the need to compare, share and reuse experiments.

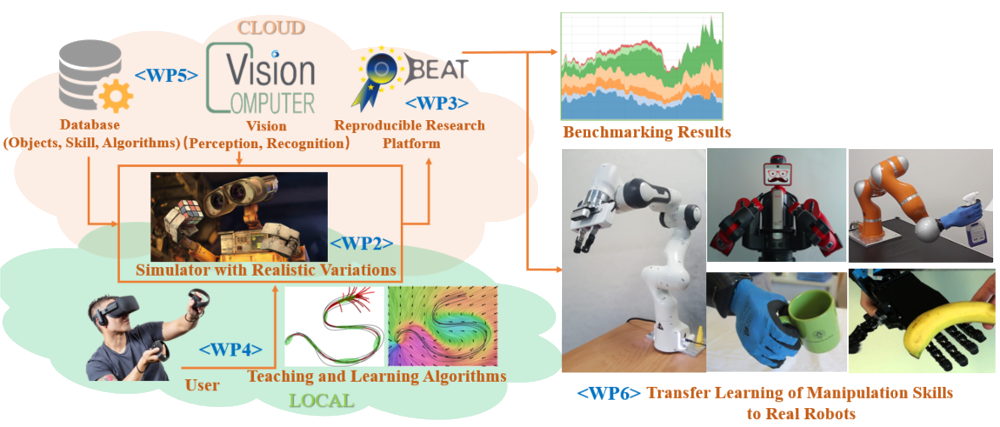

In LEARN-REAL, we propose to learn manipulation skills through simulation of objects, environments and robots, with an innovative toolset comprising: 1) a simulator with realistic rendering of variations, allowing the creation of datasets and the evaluation of algorithms in new situations; 2) a virtual-reality interface to interact with the robots within their virtual environments and teach them object manipulation skills in multiple configurations of the environment; and 3) a web-based infrastructure for principled, reproducible and transparent benchmarking of learning algorithms for object recognition and manipulation by robots.

These features will extend existing software in several ways. Components 1) and 2) will capitalize on the widespread development of realistic simulators for the gaming industry and on the associated low-cost virtual reality interfaces. Component 3) will build on the existing BEAT platform developed at Idiap, which will be extended to object recognition and manipulation by robots, including the handling of data, algorithms and benchmarking results. As a use case, we will study the scenario of vegetable and fruit picking and sorting.

Thomas Duboudin, Maxime Petit and Liming Chen, Toward a Procedural Fruit Tree Rendering Framework for Image Analysis, 7th Int. Workshop on Image Analysis Methods in the Plant Sciences (IAMPS), Lyon (France), 2019.

Poster and Oral Presentation

Code: https://github.com/tduboudi/IAMPS2019-Procedural-Fruit-Tree-Rendering-Framework

Lingkun Luo, Liming Chen, Shiqiang Hu, Ying Lu and Xiaofang Wang, Discriminative and Geometry-Aware Unsupervised Domain Adaptation, IEEE Transactions on Cybernetics, Accepted in December 2019.

Ying Lu, Lingkun Luo, Di Huang, Yunhong Wang and Liming Chen, Knowledge Transfer in Vision Recognition: A Survey, ACM Computing Surveys, 2020.

Sunny Katyara, Fanny Ficuciello, Darwin G. Caldwell, Fei Chen and Bruno Siciliano, Reproducible Pruning System on Dynamic Natural Plants for Field Agricultural Robots, Human-Friendly Robotics (HFR 2020), Springer Proceedings in Advanced Robotics, 2020.

Carlo Rizzardo, Sunny Katyara, Miguel Fernandes and Fei Chen, The Importance and the Limitations of Sim2Real for Robotic Manipulation in Precision Agriculture, 2nd Workshop on Closing the Reality Gap in Sim2Real Transfer for Robotics, Robotics: Science and Systems (RSS 2020), 2020.

X. Gao, J. Silvério, E. Pignat, S. Calinon, M. Li and X. Xiao, Motion Mappings for Continuous Bilateral Teleoperation, IEEE Robotics and Automation Letters (RA-L), 2021.

X. Gao, J. Silvério, S. Calinon, M. Li and X. Xiao, Bilateral Teleoperation with Object-Adaptive Mapping, Complex & Intelligent Systems, 2021.

S. Shetty, J. Silvério and S. Calinon, Ergodic Exploration using Tensor Train: Applications in Insertion Tasks, IEEE Transactions on Robotics (T-RO), 38(2):906-921, 2022.

Sunny Katyara, Fanny Ficuciello, Darwin G. Caldwell, Bruno Siciliano and Fei Chen, Leveraging Kernelized Synergies on Shared Subspace for Precision Grasp and Dexterous Manipulation, IEEE Transactions on Cognitive and Developmental Systems (TCDS), submitted.